Introduction

Defined as ‘the registration, analysis and representation of visual data by machines and algorithms’ (Rettberg et al., 2019: 1), machine vision is increasingly settling into mainstream popular culture. From drones to generative AI tools, recent years have seen a normalisation of these technologies and a sharpened scholarly critique of their implications. While early theories tackling military uses of ‘vision machines’ (Virilio, 1994) and machine-readable ‘operational images’ (Farocki, 2004) focus on the increasing autonomy of machine-driven decision-making, more recent accounts have urged a recognition of the human labour involved in the making-readable of such imagery, as well as the salience of machine-operated categorisation that affects humans (Buolamwini and Gebru, 2018). For this reason, this paper approaches machine vision as always being entangled in establishing or challenging different kinds of subjects: be it the machinic other, the labouring data worker, the surveilled (and often marginalised) biopolitical subject, or the viewing public as a political collective to be activated and even mobilised. Depending on the scholarly perspective taken, these socio-technical subjects may be ambiguously defined, forcefully essentialised, or granted varying degrees of agency. What this paper addresses, however, is how they can be embodied and assembled through new media art. In this respect, I comment on a series of artworks that critically engage with machine vision technologies, emphasising how they manage to place the classification dynamics of machine vision in a productive aesthetic dialogue with the open connectivity of social media.

In terms of aesthetics, AI and AI-produced art have been famously discussed by scholars such as Steyerl (2016), Manovich (2018), and Zylinska (2020), and also tested in an exhibition space by pioneering artists such as Memo Akten and Mario Klingemann, who have investigated the technicity of the human gaze (Celis Bueno and Shultz Abarca, 2021) and the autonomous creativity of neural networks (Klingemann, 2018). However, rather than focusing on the authenticity of the algorithm as a subject, its accuracy or its creativity, I foreground machine vision’s potential to produce subjects that go beyond individual representation (Uliasz, 2021). In doing so, I put AI-related literature in dialogue with theories of assembly (Parry, 2022) and collective subjectivation (Dean, 2017; Berardi, 2018), zooming in on tagging as a gesture that emancipates the performance of digital identities into social imagination. I build on the concept of ‘tagging aesthetics’ (Bozzi, 2020b) to discuss the different degrees to which artworks can concatenate different techno-social subjects, making their relations apparent, as well as contributing to their emergence as socio-technical (re)assemblages.

The choice of tagging as a pivotal concept is in line with recent calls to address AI as a socio-technical system (Sartori and Bocca, 2022; Aradau and Bunz, 2022), particularly with the goal of consolidating a dialogue between emerging AI scholarship and ongoing social media critique (e.g. see Geboers and Van de Wiele, 2020). Furthermore, tagging is specifically resonant with AI studies and machine vision, as it is an operational form of labelling. By ‘operational’ I mean that, while often encoded by humans, tagging is nonetheless conceived for machine processing. One premise, however, is relevant.

Rather than map or visualise hashtags or tags as data and/or a strictly taxonomical tool, I propose tagging as a verb that encompasses a range of heterogeneous labelling practices, including geotagging and the @-ing of users. While ‘labelling’ is more familiar and commonplace in AI-related literature, usually referring to the process of cleaning and classifying data, my framing of ‘tagging’ in the context of machine vision is intentionally broader. Here, tagging encompasses both user-enacted and algorithmically operated labelling practices that make the production of subjects and their interrelations not only more visible, but also potentially addressable as part of a socio-technical assemblage. The political connotation of tagging has been famously noted in relation to protest movements on social media, but I argue that these culturally exploded tagging aesthetics are now crucial to understanding how the fraught nature of classification is increasingly worthy of scrutiny, as the more covert forms of labelling that enable machine vision become naturalised. In other words, I extend the user-centric taxonomical function of tagging to include label-driven algorithmic classifications, to emphasise both the inherently reductive character and the performative potential of socio-technical classification.

By tapping into novel tagging aesthetics, new media artists can leverage their privileged position in the ethical assessment of new technologies (Stark and Crawford, 2019) to open up the definition of new socio-technical configurations, enabling negotiations between machinic and social subjects. The pivotal importance of new media art in my discussion then lies in its potential to illuminate which kinds of subjects are created by acts of tagging and how they can be (re)assembled, ultimately demonstrating different ways of performing the techno-social and shaping future cultural encounters with various forms of others.

Conceptually, ‘tagging aesthetics’ reinforces the dialogue between ‘relational aesthetics’ (Bourriaud, 2002) and other media theory constructs such as the ‘Stack’ (Bratton, 2015) or the ‘metainterface’ (Pold and Andersen, 2018). With this premise, the first section of this article reviews key literature to outline the faceted subject(s) of machine vision and the main conflicts inherent to their definition. In so doing, I situate my intervention within the current debate and gesture towards new media art as my main object of discussion.

The second section delves into tagging as the pivotal conceptual device of this paper, highlighting how it is useful to bridge a theorisation of collective subjects on social media, and subjectivation in the age of machine vision. The section is important not only to outline my theoretical framework, but also to highlight the importance of tagging as a concept to address the ambivalent nature of socio-technical imagination. On the one hand, tagging is inherently grounded in socially reductive processes of categorisation, while on the other hand (in the context of social media, as well as the artworks discussed) it also demonstrates a level of interconnectedness, co-presence and even agency.

The final section explores a range of new media artworks. I start with the seminal ImageNet Roulette (2019) by Kate Crawford and Trevor Paglen, before a more detailed examination of works by Dries Depoorter and Max Dovey. My overview of each artwork illustrates different levels of compromise and agency in the co-imagination of multiple subjects alongside machines, focusing in particular on three different approaches to tagging aesthetics: (dis)identification, semi-automated assembly and embodied encounter. Finally, this paper concludes by assessing the benefits and limits of tagging aesthetics as a critical tool to understand the formation of socio-technical subjects in the age of digital platforms and distributed machine vision.

The Subject(s) of Machine Vision

If we look at AI as a socio-technical system (Aradau and Bunz, 2022; Sartori and Bocca, 2022), it is important to discuss the main identity conflicts inherent to this debate, and the subjects they produce. I look at four of these identity conflicts: the ontological conflict between subject/object – human/machine; the biopolitical conflict between the machinic viewer and the observed subject; the socio-technical conflict between the humans designing the algorithms and those performing data work; and the political conflict between the viewing public and AI as a system.

Since I approach machine vision through examples of new media art, it is important to emphasise that the role of aesthetics in my framing is specifically to resolve the inherent ambivalence and incommensurability of the aforementioned ‘subjects’, which belong to heterogeneous notions and perspectives. I propose that it is precisely in the tangible ambiguity of new media art that, by leaning into what critic Nicolas Bourriaud (2002) termed ‘relational aesthetics’, artists can (re)assemble those subjects in new productive configurations. Characterised by enacting loosely defined social relations within a gallery space (e.g. making food for visitors), relational art has been famously criticised for its excessive embeddedness into homogeneous social milieus and lack of antagonism (Bishop, 2004). However, more recent accounts have also recuperated it in the context of social media and social media-aware interactive art (Bozzi, 2020b; Spartin and Desnoyers-Stewart, 2022). This relational emphasis is intimately connected to the way that I use the terms ‘subject’, ‘subjectivity’ and ‘subjectivation’ in this paper. Bourriaud addresses the production of subjects through art with reference to Felix Guattari, for whom art can help ‘seize, enhance and reinvent’ subjectivity, creating ‘potential new models’ for human existence and ‘new agencies’ within existing categories (Bourriaud, 2002: 88–89). In other words, art can de-naturalise and deterritorialise subjectivity by ‘unsticking it’ from the individual and re-mapping it onto new arrangements, including heterogeneous pairings with the non-human – such as socio-economic and informational machines (Bourriaud, 2002: 90–91). Beyond art, media theorists like Franco ‘Bifo’ Berardi have also explicitly linked Guattari’s characteristically ambiguous notion of subjectivity to the need for new techno-poetic platforms for the subjectivation of cognitive workers in the age of social media (Berardi, 2018: 156).

Given the entanglement of aesthetics and relationality in this notion of subjectivity, in this paper the ‘subject’ is thus bound to representation in a twofold sense: representation as something that is defined and made sensible through art; and representation in a more political sense, as a subject aware of itself and thus implicitly endowed with potential agency. I approach the subjects of machine vision as they emerge and naturalise through different conflicts that pit them in juxtaposition to each other, before I propose art as a way to (re)assemble them and denaturalise them.

The first conflict is the ontological juxtaposition of object and subject, which is at the core of some of the most influential analyses of machine vision. On the one hand, this contrast implies a reversal of perspective: ‘Now objects perceive me’, writes Paul Virilio, quoting Paul Klee (1994: 59). There is also a representational issue: artist Harun Farocki famously discusses aerial war pictures and the disappearance of the human from them, coining the term ‘operative images’ to describe ‘images that do not represent an object, but rather are part of an operation’ (2004: 6). These realisations might result in apocalyptic techno-determinism (Bostrom, 2014) or the embrace of posthuman hybridity (Haraway, 1985; Hayles, 1999), but the stakes are definitely ontological. As objects gain awareness and agency, while human subjects are increasingly dependent on the non-human, will we start to empathise with the machine and challenge our own subjecthood? Or will this dichotomy naturalise into an unbridgeable gap, essentialising both subjects and objects into an existential struggle? To find out, we need to address more questions, for example: where does the process of machine vision start? Whose agency does it extend, and whose is restricted?

This leads to the second conflict, which is biopolitical. This juxtaposition puts a multitude of surveilled human subjects against the latent one produced within the machinic viewer.

In this respect, Uliasz (2021) explores the relationship between what Amoore (2013) terms the ‘emergent subject’ of algorithmic vision and Farocki’s operative image, by taking the example of a multi-target, multi-camera university surveillance system, used to collect a large-scale image database depicting students, staff and passers-by. Uliasz interrogates the representational value of those images in relation to subjectivity: since the system does not index people as individuals, thereby not representing anyone, what is the subject being produced? According to Uliasz, ‘algorithmic patterning facilitates decision-making processes based on what is not present to account for what possibly could be’, which means that ‘algorithmic hallucination of subjects as a function of biopolitical control becomes a performance, run amok of an archive’ (Uliasz, 2021: 6). This performance produces subjects that are not really there, but are always latent and waiting to be manifested into visualisation by the algorithm. Going back to the algorithm/machine as a subject itself, the point is then not so much to perceive or see something or someone, but ‘to produce a world of relations, the grounds from which subjects are made, seen, and named’ (Uliasz, 2021: 8). This relational potential is crucial to this paper’s framing of tagging and it is also grounded in the third subject juxtaposition, which is of particular interest here.

The third conflict is the socio-technical split between AI system designers and data workers. The labelling of data is the process whereby this conflict and the biopolitical conflict mentioned above intersect. Suchman argues that AI is ‘a cover term for a range of technologies of data processing and techniques of data analysis based on the iterative adjustment of relevant parameters, according to some combination of internally and externally generated feedback’ (Design Lab 2020, cited in Aradau and Bunz, 2022: 12). This suggests that the setting of those parameters and the generation of that feedback entails the production of layered, juxtaposing human subjects: the engineers and technicians who design and programme the system for data extraction/collection, the people who clean the data and organise it according to the rules set by the engineers, and finally, the living, embodied groups of people to whom (at least in intent) the social categories that are defined and classified correspond. This has different intersectional implications, as dataset curation is famously marked by cultural assumptions that can impact marginalised communities in different ways, by virtue of both the machinic ignorance of contextual information and the inefficient, productivity-driven logics of data work. The way algorithmic sorting is imbued with problematic ideological baggage has been much discussed in recent years (for examples, see O’Neil, 2016; Noble, 2018; Chun, 2018; Buolamwini and Gebru, 2018; and Amaro, 2023), focusing particularly on how automated decision-making processes are based on badly curated datasets, which rely on underpaid work and dated taxonomies that end up amplifying existing social inequalities. In particular, Scheuerman et al. (2020) explore the role of database annotation in relation to race and gender, noting a lack of shared definitional standards (58: 20) and a limited focus on visible markers, ultimately suggesting the incorporation of self-identification at the stage of data collection (58: 25). Also in terms of agency, Aradau and Bunz (2022: 10) point out that the system relies in particular on dispersed and underpaid microworkers, so that ‘the data “cleaner” becomes in fact the other of the tech worker’ (14–15). This relationship is partially obscured from the very practitioners who use data annotation services, with the result that the work is often misunderstood (Catanzariti and Bennett, 2022). At the level of both classification and annotation, then, socio-technical subjects are defined, delineated, juxtaposed and ultimately naturalised in social hierarchies.

The final conflict is a political one. How is the collective viewing public made aware and perhaps even activated as a subject by the encounter with AI and machine vision? How are the identity conflicts mentioned above represented? Just as important, is visibility enough to grant political activation as a collective subject? Surveying artists directly, Stark and Crawford (2019: 443) approach the ethics of digital art practice by identifying and interviewing those among them working with facial recognition technologies as a ‘significant but often undervalued community’. According to Stark and Crawford, their interviewees deploy ‘defamiliarisation’ in two ways: either making unfamiliar elements of computational technology seem normalised and domestic, or presenting everyday abstracted objects and practices as alien or discomforting (446). For the authors, this approach is critical to what Walter Benjamin called for in his seminal take on art and politics:

Art acknowledged as both a product of collective sociotechnical endeavor – as made by many people working together – and for a collective audience was for Benjamin the most effective means to transcend habituation, shocking viewers into a reconsideration of their political situation. (Stark and Crawford, 2019: 447).

Again, such a framing suggests the coalescence of at least two subjects around the representation and enactment of machine vision in media art: the socio-technical subject of those labouring to enable the application of the technology, and the viewing public/interacting audience. However, there are challenges to this dehabituation (or, again, denaturalisation). One is the ‘habitual’ nature of digital platforms, where a collective ‘we’ is atomised into a multitude of YOUs (Chun, 2016); the other is the limited impact of ‘legibly political art’, which too often confuses the making visible of an issue with empowerment against it (Gogarty, 2022).

What can we expect, then, from tagging aesthetics? In the next sections I flesh out this concept and comment on a range of machine-vision-related artworks. The goal is to understand how the aforementioned subjects can be revealed in their conflictual relationships and performatively (re)assembled in a critical way to denaturalise them and ‘dehabituate’ them to machinic classification.

Tagging and Machine Vision

As outlined before, scholarly discourse on machine vision often tackles the ideological connotation of classification and labelling, as well as the labour inherent to it. Bowker and Star (1999) offer a relevant account of classification systems in general, explored as ideologically determined and continuously maintained infrastructures that rely on categorical work (286). For Bowker and Star, naturalisation means ‘stripping away the contingencies of an object’s creation and its situated nature’, leading it to lose its ‘anthropological strangeness’ (299). When it comes to human subjects, acknowledging the contingency and situatedness of classification is then crucial to countering the naturalisation of oppressive categories, a tradition cultivated by feminist and race-critical theory (308). Significantly, according to Bowker and Star, classification systems are both material and symbolic, which makes a category something ‘in between a thing and an action’ (1999: 285–286). It is from here that I wish to approach tagging.

From early discussions of ‘folksonomies’ (Vander Wal, 2007) to more recent explorations of activist movements (De Kosnik and Feldman, 2019; Florini, 2019), tags and hashtags have been widely discussed not only as foundational features of the participatory web, but also as complex socio-technical objects that intervene in processes as diverse as bottom-up knowledge organisation and networked identity (Losh, 2019; Bernard, 2019), with especially fraught implications in terms of the latter (Dame, 2016; De Kosnik and Feldman, 2019). There are, however, other ‘taggings’ on social media that afford different kinds of identity labelling and are not usually discussed as such alongside hashtags. This is due to their structural incommensurability – in other words: they are not easily scraped and mapped alongside each other in a cohesive fashion. One example is the tagging of users operated by ‘@-ing’ them to attract their attention or elicit a response, and another is the geotagging of a post with a specific location, linking that post with a whole constellation of heterogeneous content. From this broader perspective, tagging is the creation of a label that taps into a socio-technical infrastructure, materialised into perception for both humans and algorithms.

But what is the relevance of tagging to machine vision, also considering the identity conflicts outlined above?

First of all, machine vision is already present in social media and does involve covert, automatic forms of tagging. From Facebook’s controversial automatic face-tagging algorithm (Aguado, 2011) to Google infamously tagging black people as ‘gorillas’ (Dougherty, 2015), different techno-social taggings have punctuated critical discussions on social media as infrastructures for surveillance and social sorting. However, these practices are also ‘business as usual’ in everyday marketing operations. Image classification is a key element of the translation of cultural experiences into machine-readable data (Carah and Angus, 2018) and hashtags are crucial to fill in the socio-technical context missing from automatically assessed social media images (Geboers and Van de Wiele, 2020). Significantly, the automated or semi-automated taggings of machine vision might be seen as inherently different from the ‘active’, user-driven taggings discussed in relation to social media, but they are interlinked. Even companies like Netflix sometimes rely on human ‘taggers’ to convert nuanced cultural subgenres into computable categories for their system (Finn, 2017: 90–91). Social media tagging teaches us that simple gestures can materialise the invisible layer between human users and algorithmic intelligence, representing a crucial point of intersection between culture and technology, and perhaps serving as a key site for the re-imagination of collective identities. Scholars have been paying increasing attention to this issue: for example, Finn (2017: 2) writes about ‘culture machines’ as ‘assemblages of abstractions, processes and people’, while Chun (2016; 2018; 2021) has often highlighted the need for rediscovery of history and identity politics within the realm of big data and pattern recognition. 
Machine vision encapsulates the socio-technical potential of social media tagging and converts it into a supervised form of social imagination, hiding it behind its infamous opacity (Burrell, 2016). In other words, machine vision naturalises relationships across heterogeneous subjects, making those relationships disappear. How can we leverage the concept of tagging in this context, then? Instead of focusing on tags and hashtags solely as things, in this paper I reframe tagging as an action. More specifically, I propose tagging as an operational gesture that stitches together and conjures up different subjects, performatively linked in a relation that makes some of them visible. Tagging aesthetics, as I will explore in more detail in the next section, are then critical labelling practices that not only attempt to make more of those subjects visible, but also to (re)assemble them into a more inclusive and self-aware socio-technical formation. Following these taggings – be they enacted by users or by an algorithm – might help unravel the subject(s) of machine vision and denaturalise the ways they are socio-technically determined, reduced and shaped.

Tagging Aesthetics in AI-Driven Media Art

The previous sections have outlined the multiple subjects and conflicts engendered by machine vision, and also introduced tagging aesthetics as a critical framework to unpack how this happens through new media art. This section pulls the two elements together by discussing artworks that deploy different forms of tagging to assemble heterogeneous subjects while denaturalising their relationship with machine categorisation.

(Dis)identification

Trevor Paglen and Kate Crawford’s ImageNet Roulette (2019), probably the most viral example of the ‘archeology of datasets’, is a textbook case of how things can get thorny when machine vision is used to classify people (Figure 1). It was presented as both a website and an installation, where people were allowed to be ‘recognised’ by a machine vision algorithm trained exclusively on the images and labels in the ‘person’ category of the ImageNet dataset, which is normally used for object recognition (Paglen and Crawford, 2019). ImageNet Roulette invited people to submit themselves to the machinic gaze through a camera or webcam, and then witness their own likeness bound by the characteristic green rectangle and ‘tagged’ according to the labels the algorithm was trained on. This playful experiment combined the precise aesthetics of the bounding box (framing one’s face in real time) with the sometimes grotesque inaccuracy of the associated tags, generating a cultural glitch and exposing the taxonomy undergirding the vision process.

Twitter post by Kate Crawford, @katecrawford, 20 September 2019, https://twitter.com/katecrawford/status/1175128978274816000 (last accessed 4 October 2023). Screenshot by the author.

Material about ImageNet Roulette explained how the images from the dataset had been originally labelled by low-paid Amazon Mechanical Turk (MTurk) workers, who had to manually categorise them using the notoriously biased WordNet semantic structure (Goriunova, 2020: 3). As a consequence, labels included sexist and racist elements such as ‘slut,’ ‘rapist,’ ‘Negroid,’ and ‘criminal’. After the project went viral and made media headlines, the dataset creators eventually expunged 438 human categories from their database, along with hundreds of thousands of images (Ruiz, 2019).

Overall, the project was very successful in exposing the bias embedded in the famous dataset, as well as raising awareness about the way machine vision works. Regardless of its material outcome, however, ImageNet Roulette unravelled a relational chain encompassing various subjects, bringing some to light and inviting others to participate. Questioning the ‘de-biasing’ of the database that followed the project, Olga Goriunova asks:

The question is, where is the racism exhibited by ImageNet Roulette located? Is it in the WordNet that includes inappropriate and offensive categories and words? Is it in the workers who chose those ‘person’ categories? Is it in the 100+ countries whose cultures informed the labeling of the images? Is it enhanced by the system of the Mechanical Turk? Is it now inscribed into the ImageNet dataset? Is it something the creators of ImageNet must have considered and tried to counter-act at the point of design? (Goriunova, 2020: 4).

Goriunova’s questions suggest that the issue of bias cannot be resolved by an art project alone, even if viral, but they also follow the chain of techno-social relationships enacted and interrogated by it – a process of re-situation that gestures towards a denaturalisation of the classifications operated by machine vision.

Lauren E. Bridges (2021) discusses the same artwork, offering some useful insight in the context of a theory of ‘digital failure’ and ‘unbecoming’ good data subjects. Bridges draws on the concept of ‘disidentification’ (Muñoz, 1999) and focuses on those glitches in identification that, while emerging from mischief and irony, lead to the ‘failure of sociotechnical subject formation in post-modernity’ (2–3). In the case of ImageNet Roulette, disidentification happens in two ways.

Firstly, Bridges (2021) breaks down the role of the ‘bounding box’ as a site of critical inquiry. The green box is akin to a ‘boundary object’ (Leigh-Star and Griesemer, 1989) that represents ‘captive data’, helping the machine see like us, but also highlighting miscategorisation as it happens. Linking Bridges’ reading to the biopolitical conflict discussed earlier in this paper, we could argue that the familiar green box pulls the non-human into the screen, opening the frame of surveillance to reflexivity (we see the machine seeing us). Among the complex concatenation of discursive elements deployed by ImageNet Roulette – text, website, installation, Twitter response – manifesting the green square, it is then important to discuss how machine vision becomes a discursive object through aesthetics.

Emphasising a different aspect of the same project, Bridges (2021) also notes how ImageNet Roulette’s appeal lay in ‘the intense affective response that it generated online, which flowed between disgust and outrage, to amusement and play’ (8). Sharing one’s miscategorisation on Twitter created for Bridges ‘an affect of pleasure and solidarity’, a moment when humans used digital failure to delegitimise reliance on fallible systems.

This account emphasises the failure of subject formation, although we could also argue that, while feeding one’s face to the algorithm and submitting to machinic tagging was initially an individual experience, the mislabelled subjects of machine vision also voluntarily ‘tagged’ themselves into a collective conversation on the social platform. By using the platform’s connective affordances of linking, replying, hashtagging and @-ing to plug their usernames into this conversation, they established a material network of ‘data fugitives’ (to use Bridges’ term). These data fugitives thus evaded one chain of subjectivation, arguably engendering another subject altogether. In response to the socio-technical conflict discussed at the beginning, this subject arguably amounts to the residual category that, according to Bowker and Star, is never missing from any taxonomy (1999: 39). In terms of the political conflict mentioned above, the affective Twitter ripples of ImageNet Roulette also turned users’ faces and their emotional reactions into a plural feeling, a form of what Jodi Dean has described as ‘selfie communism’ (2017: 6). Dean writes: ‘The face that once suggested the identity of a singular person now flows in collective expression of common feelings. Reaction GIFs work because of the affect they transmit as they move through our feeds, imitative moments in the larger heterogeneous being we experience and become’ (6). With ImageNet Roulette, ‘reacting’ to one’s own likeness as it is operationalised and miscategorised by the algorithm similarly draws people, and not only their attention, into a common conflict that pulls them together as a subject. What comes after their collective assembly – the de-biasing of the dataset, for example – is in this respect secondary to their coming together and showing up as addressable, @-able constituents. 
ImageNet Roulette is thus a seminal work in terms of tagging aesthetics: socio-technical subjects and their relations are not only made visible, but performatively re-assembled into moments of affective synchronisation. At different degrees, these two defining tensions (one leaning towards the technical, one towards the social) are present in all the other works I address in this paper.

Semi-Automated Assembly

Beyond its taxonomical function, tagging is about connection. As a broader techno-cultural form, it in fact enacts a type of performative ‘assembly’, something Kyle Parry (2022) defines as ‘any combination of expressive elements that maintains and seizes on the appearance of selection and arrangement’ (3). For Parry, while other cultural forms focus on a cohesive whole that supersedes its parts, assembly is instead about ‘constituents remaining discernible, separate, in conversation and sometimes in conflict’ (2022: 4). In terms of representation, it encourages a type of ‘plural reading’ that encompasses a range of ‘exploratory relations’ that are often quite unfamiliar, understudied and ‘unnatural’ (17–21). Significantly, Parry explicitly mentions the hashtag #jennyholzer or users such as @jennyholzerbot on Instagram as examples of assembly (82–83). From this relational perspective, the assemblages produced through the different taggings I discussed are then incohesive, yet materially constituted collective subjects that involve human, technical, cultural and social concatenations.

Geotagging represents a similar gesture, where the label is a technical stand-in for a physical location that the tagger is ‘pulling’ themselves towards. In terms of identity and addressability, geotagging is often an easy way to tap into socio-cultural capital or signal proximity to specific milieus (Beekmans, 2011; Bozzi, 2020a). This element figures prominently in the work of Belgian artist Dries Depoorter, which speaks to tagging and subjects in different ways.

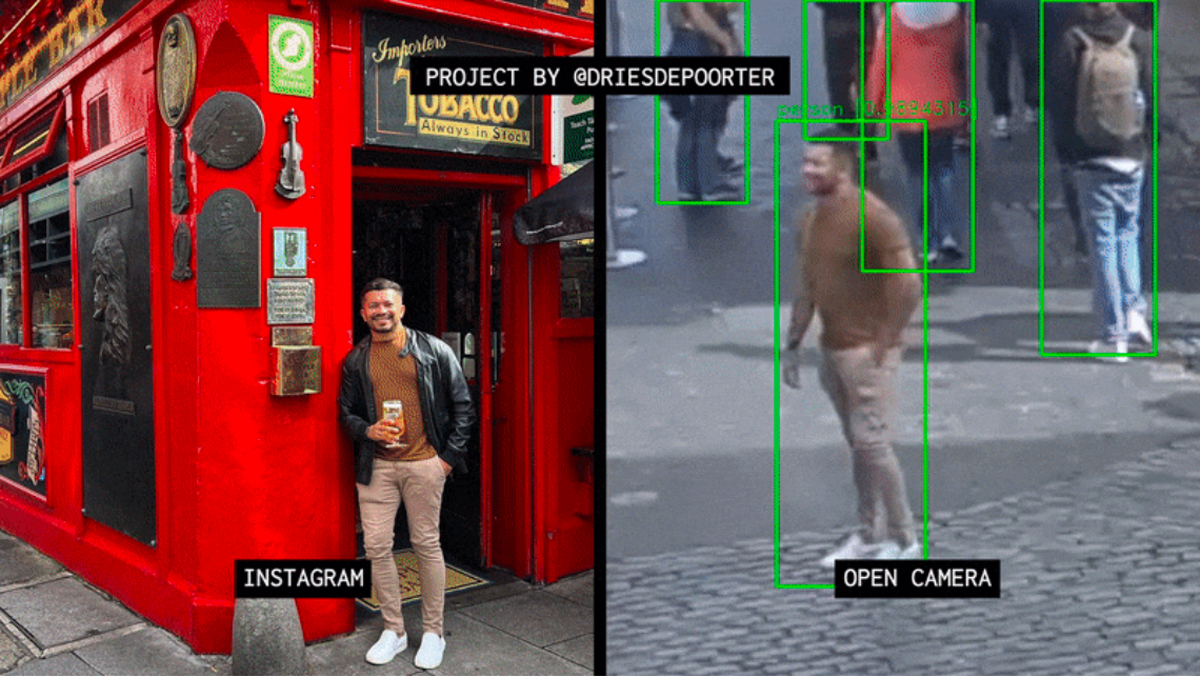

The Follower (2022) is a comment on both distributed surveillance infrastructures and the artificiality of social media identity. Depoorter describes the project very succinctly on his website:

Recorded a selection of open cameras for weeks.

Scraped all Instagram photos tagged with the locations of the open cameras.

Software compares the Instagram with the recorded footage. (driesdepoorter.be, last accessed 3 October 2023)

While the description is quite minimal, there are a number of implications for how the project is designed and presented. In this respect, The Follower went public as a YouTube video (pictured above), showing a carefully curated Instagram portrait of a person on the left and, on the right, a fragment of a video feed demonstrating the careful labour of setting up the pose (Figure 2). Starting from its title, the project is framed as a commentary on surveillance, as well as influencer culture: the subjects selected for the operation were in fact users with over 100,000 followers (Cole, 2022), although their Instagram handles were not included in the video or screenshots. Despite their assumed status as relatively ‘public’ social media individuals, non-consensual depiction within the video was criticised by both commentators and some of the influencers themselves. For example, digital media scholar Francesca Sobande expressed scepticism about using open cameras in the name of art, with the risk of reinforcing a societal state of surveillance that disproportionately impacts marginalised categories (Stokel-Walker, 2022). Nayyar (2022) instead noticed that the faces in the video were left unblurred, and also that some of the users who ended up in it were upset about the artist taking their IG photo and reposting it without consent. (Interestingly, however, the video was apparently taken down due to a copyright violation claim advanced by the open camera website.) From this perspective, the project is akin to what one of the artists interviewed by Stark and Crawford in their survey of AI-focused media art describes as ‘cop art’ (2019: 449) – a type of engagement with technologies that deploys stalking without consent as a way to raise awareness about it.

Dries Depoorter, The Follower (2022). Image courtesy of the artist.

The project’s use of tagging aesthetics is worthy of inquiry in terms of both surveillance and identity performance. The first type of ‘tagging’ is the making visible of machinic classification through the green rectangle, which taps into a dystopian surveillance imaginary, juxtaposed with the human frame preferred by the influencers themselves. Watching the video, we witness the bounding box following the subjects, ‘capturing’ them, as suggested in the Bridges account referenced above. The second type of tagging, the geotagging that enables the addressability and identification of the human subjects involved, is more materially significant. While this process of triangulation is not entirely automatic, tagging is crucial as the missing link between non-contiguous socio-technical systems: machine vision (encompassing the artist, his algorithm, the human subjects and training data) and social media (influencer profiles, their followers and geotags). Linking at least four layers of the ‘stack’ theorised by Benjamin H. Bratton (2015) – user, interface, address, city – The Follower leans heavily into the technical ‘visibility’ affordance of tagging aesthetics, thus revealing a chain of subject relations. In terms of the collective subjectivation described in relation to ImageNet Roulette, however, the project makes a contrasting statement. The performative labour aimed at an imagined audience that is depicted in the video feed might be implicitly framed as inauthentic, vain, or imprudent (also depending on viewer interpretation); it is the geotagging of the posted picture, however, that submits the influencers to algorithmic addressability and eventually ropes them into (artistic) stalking. If Paglen and Crawford’s work prompted people to actively unite in miscategorisation, if only in a gallery or on Twitter, Depoorter’s seems to invite the passive, creeped-out Instagram users to scatter into disconnection (or, at the very least, untagging).

The Follower builds on a previous piece entitled The Flemish Scrollers (2021–2023), which also deploys a public video feed. This time, the machine vision algorithm targeted a YouTube stream of routine Flemish government meetings, recording a clip whenever someone was caught using their phone for more than a few seconds. Images about the project present both the faces of the politicians and their phones framed by bounding boxes, the former labelled with their name preceded by the ‘@’ sign (Figure 3). This choice is significant in terms of tagging aesthetics: @-ing a user is one of the main ways of enacting the relationality characteristic of social media as a socio-technical system. While it does not represent a category, in terms of identity this form of tagging enacts a technical addressability that ‘pulls’ a specific user/subject into a particular conversation, debate, or issue, assuming relatability or demanding attention. This has different implications on Facebook, Twitter or Instagram, depending on both the affordances and the context (Birnholtz et al., 2017; Massanari, 2017; Bozzi, 2021). Given the public status of the people depicted, as well as their institutional position, The Flemish Scrollers definitely has a name-and-shame element to it: the politicians caught wasting time on the voters’ dime are identified automatically, and the clips are published on both Instagram and Twitter. Crucially, posts also include the politicians’ social media handles, so they are officially tagged and addressable for any disappointed citizen who might want to share their opinion of them. The project apparently built on existing media hype about Belgian politicians being distracted at work, but it also has the tongue-in-cheek approach shared by several of Depoorter’s previous artworks, a few of which play with automatic outrage (Wille, 2021). 
At the time of writing, The Flemish Scrollers’s social media channels have not been updated since 22 May 2023, so it is difficult to check whether users did in fact enforce the material addressability of the parliamentary ‘slackers’, but there was some engagement with the posts and they do remain as a networked memento of Depoorter’s endeavour.

In terms of tagging aesthetics, The Flemish Scrollers also deploys the green rectangle as a discursive reminder of algorithms as ‘objective’ arbiters (in this case, of institutional responsibility), while the social media tagging involved has a very different function from the one it has in The Follower. As the politicians captured on their phones are unwittingly roped into the subject triangulation outlined before, the viewing public is implicitly invited to engage as either commenting spectators (if only by virtue of a persisting social media feed that stands as a documentation of the project) or even angered citizens, potentially demanding an unlikely accountability through the @-able usernames of their representatives. The surveilled subjects are thus complemented by the viewing public, stitched together as a polity through tagging.

Both of Depoorter’s projects rely on different social media taggings (@-ing and geotagging) to triangulate with the discursive element of the bounding box, merging machine vision and social media into one socio-technical system. On one side is the machinic gaze, and on the other the social scrutiny of either stalkers/followers or the call-out culture/accountability suggested by @-ing the politicians directly. Despite the artistry behind it all, Depoorter seems to suggest that there is indeed a subjective continuity across this system – something akin to a distributed ‘optical governance’ (De Seta, 2020) sustained by ‘reality anchors’ (Goriunova, 2019: 13) and the ‘digital forensic gaze’ (Lavrence and Cambre, 2020) typical of social media publics. The next artist presents a very different perspective on machine vision and tagging aesthetics.

Embodied Encounter

If Depoorter’s works emphasise the creepy subjectivation potential of digital media by leaning into the visibility afforded by tagging aesthetics, Max Dovey’s work develops the more performative, embodied, aggregative counterpart also mentioned in my analysis of ImageNet Roulette. Dovey’s A Hipster Bar (2015) is especially interesting in terms of tagging aesthetics because it directly addresses the imagination of a collective subject that is culturally determined and cobbled together as a latent algorithmic double, in a process that is aimed at digital failure. The installation consists of an actual bar, presented within an exhibition space, and isolated from the rest of the venue via a (pretty symbolic) lifting gate (Figure 4). At the gate, visitors need to be vetted by an image recognition algorithm that determines whether they are ‘hipster’ enough to enter the bar. Visitors have to wait while their image is processed, and then the matching rate against the idealised ‘hipster’ buried deep in the dataset is shown as a percentage on the screen. If the rate is not high enough, the bar locking the gate will not lift.

While at an immediate level the project seems like a playful satire of pretentious subcultural fetishism and urban gentrification, in terms of subjectivity it taps into several key areas of interest in this paper. First of all: how do you define a hipster? In order to teach the algorithm, Dovey gathered a dataset of Instagram images tagged #hipster, which returned a surprisingly heterogeneous sample comprising anything from coffee cups to bearded men and even dogs. In order to define a hipster, Dovey also had to scrape content featuring the #nonhipster tag, which ironically returned images that were arguably more ‘hipster’ than those tagged directly with the label. Dovey also noted how imagery from non-Western contexts such as Asia had a different tone to it, injecting even more subcultural dysphoria. Eventually, the artist had to regularly tamper with the sorting by making sure there were plenty of human faces in the dataset, so that the algorithm could be used to vet humans at the venues where the installation was on show.

As another example of digital failure, the deliberately flawed process of selection designed by Dovey exposes the elusive nature of cultural stereotypes – in this case an avatar that stitches together pictures of bearded men and glasses along with coffee cups and clothing brands – and how they are impossible to recreate algorithmically. Unlike ImageNet Roulette, but in some ways akin to the affective response to it noted by Bridges, A Hipster Bar conceals the training of the algorithm and focuses on having visitors experience refusal together in an embodied space. The taxonomical label chosen by the algorithm is not made transparent, which opens up a common space for the refused to define themselves outside the ‘hipster’ category. This extemporaneous collective subject is mirrored by the latent algorithmic one, a contradictory, not-quite-representable cultural avatar of pretentious consumerism, trendy affectation, and gentrification (the ‘hipster’) that remains locked inside the box (this time a black one, instead of green). A Hipster Bar does not address representation in a pictorial fashion, yet it does involve it in terms of creating a latent image that, as in the Uliasz account referenced above, in the end might be there or not. The ‘averaged-out hipster’ is never revealed, but a collective social subject based on shared cultural consumption and hashtagging behaviour is constantly evoked.

While the tagging labour inherent to training the algorithm for A Hipster Bar is hidden, other projects by Dovey focus on data cleaning and algorithm training labour, also enacting that labour live, in collaboration with participating audiences. In HITS (2016), Dovey and Manetta Berends adapted an automatic image tagging application into a participatory game show with cash prizes. Dovey describes the experience as a sort of ‘Exquisite Corpse’ where different groups in the audience collaborate across different rounds – downloading images, labelling them, and then passing them on to the developers (Bozzi, 2019). The project can be seen as a comment on immaterial labour, but also on the frantic working schedule of data workers, as mentioned before. Like A Hipster Bar, it taps into the embodied potential of shared experiences, which stand in stark contrast to the practices of the industry. In terms of tagging and subjectivation, the project temporarily activates a collective ‘tagging subject’ that is as much aware of itself (if merely by virtue of sharing a place and time) as of its relation to the machinic other. The ‘anthropological strangeness’ of the yet-to-be-naturalised, mentioned by Bowker and Star (1999: 299), is thus preserved.

Compared to Depoorter’s detached, semi-automated assembly, Max Dovey’s approach to tagging is grounded in embodied encounters, physically gathering people by playfully associating them with the ‘hipster’ tag or having them tag data in the same room. These operations assemble a different kind of subject, contingent on lived space and shared experiences of (mis)categorisation. The focus on cultural consumers and data cleaners also adds a class element to the experience, something that resonates with Franco ‘Bifo’ Berardi’s proposal to reprogramme the relationship between technology and life, starting from work and the subjectivation of cognitive workers (2018: 79). Noticing that globalisation allows the movement of economic flows and not people, thus disconnecting the mind from the social body, Berardi calls for a new techno-poetic platform for the collaboration of cognitive workers worldwide (156). The word ‘poetic’ is very important, as Berardi shares the Guattarian inspiration of relational aesthetics: while capitalism produces semiotic models that constrain social imagination, the content within those models can create possibilities that exceed their capitalist container. Significantly, the way out needs to come from an ‘ethico-aesthetic intuition’ (2018: 180–181). Perhaps Dovey’s work can be the place where the ‘intuition machines’ (Kronman, 2020) of machine vision come together with the labelling workers and the miscategorised public to assemble a socio-technical subject that is not extracted from the social body, but tightly enmeshed with it.

Conclusion

So, what is the value of tagging aesthetics within media theory and new media art critique? Compared to the fuzziness of the more broadly defined ‘relational aesthetics’ (Bourriaud, 2002), tagging aesthetics rely on techno-social ‘anchors’ to provide material orientation within increasingly naturalised megastructures like the ‘Stack’ (Bratton, 2015) or the ‘metainterface’ (Pold and Andersen, 2018). Beyond this theoretical purchase, however, there are also political considerations to be made.

New media artists are often concerned with surveillance and even driven by policy-oriented goals, acting between the realms of hackers and activists. However, demonstrating the workings of a technical infrastructure might not work without conjuring up some kind of subject that is salient to it. Given the challenge of representation inherent to machine vision, then, artists working with this technology must not only deal with images and what they depict, but often also participate in a process of subject enactment and concatenation. As a thing and an action, a reductive representation and a productive connection, tagging exemplifies a material and discursive contribution to collective socio-technical subjectivation.

Discussed through the work of the different artists analysed above, tagging aesthetics demonstrate different relational attitudes towards the subjects of both machine vision and social media, as experiences of connection that might not always be at the centre of other AI-driven artworks. These relational attitudes – (dis)identification, semi-automated assembly, and embodied encounter – grant different degrees of material agency. However, their social, cultural and political resonance will vary vastly depending on the subjects, identities and cultural figures that become entangled in those configurations. To be sure, some of these artistic experiments are ironic, playful and rather general. Future explorations of tagging aesthetics might tackle identity markers in more fraught or less explored contexts, delving deeper into the political implications of these artistic techniques.

While an obvious difference is the impact they had, discernible through published statements and third-party commentaries, this paper has looked at each artwork as an assemblage of heterogeneous subjects, rather than an activist action. Such assemblage is operated through different taggings – the discursive surfacing of the green rectangle, the enactment of data labelling, and the active or passive plugging into social media conversations. Geotagging, @-ing and data labelling remain different techno-social gestures, but the performative affinity between each act of tagging can gesture towards a possibility of (re)assembly, an establishment of commonality that manifests moments of temporary proximity, and an invitation to denaturalise the labels that keep us separate. Beyond awareness, tagging speaks to the inherent agency users have, if only by temporarily ‘becoming’ or ‘unbecoming’ techno-cultural subjects. Far from being a tool for mapping knowledge and essentialised identities, tagging aesthetics are ways to perform the techno-social and shape future cultural encounters with various forms of others.

Acknowledgements

The author wishes to thank both the collection’s editors and the peer reviewers for their invaluable feedback and advice on this paper. Thanks also to the artists involved, who agreed to images of their work being used within the article.

Competing Interests

The author has no competing interests to declare.

References

Aguado, C 2011 Facebook or face bank. Loyola of Los Angeles Entertainment Law Review, 32(2): 187–228.

Amaro, R 2023 The Black Technical Object: On Machine Learning and the Aspiration of Black Being. London: Sternberg Press.

Amoore, L 2013 The Politics of Possibility: Risk and Security Beyond Probability. Durham, NC: Duke University Press. DOI: http://doi.org/10.1515/9780822377269

Aradau, C and Bunz, M 2022 Dismantling the apparatus of domination?: Left critiques of AI. Radical Philosophy 212, Spring 2022: 10–18.

Beekmans, J 2011 Check-in Urbanism: Exploring Gentrification through Foursquare Activity. Unpublished thesis (MSc). University of Amsterdam, Graduate School of Social Sciences.

Berardi, F 2018 Futurabilità. Rome: Nero Editions.

Bernard, A 2019 A Theory of the Hashtag. New York, NY: John Wiley & Sons.

Birnholtz, J, Burke, M and Steele, A 2017 Untagging on social media: Who untags, what do they untag, and why? Computers in Human Behavior, 69: 166–173. DOI: http://doi.org/10.1016/j.chb.2016.12.008

Bishop, C 2004 Antagonism and Relational Aesthetics. October, 110: 51–79. DOI: http://doi.org/10.1162/0162287042379810

Bostrom, N 2014 Superintelligence: Paths, Dangers, Strategies. Oxford: Oxford University Press.

Bowker, G and Leigh-Star, S 1999 Sorting Things Out. Classification and Its Consequences. Cambridge, MA: MIT Press. DOI: http://doi.org/10.7551/mitpress/6352.001.0001

Bourriaud, N 2002 Relational Aesthetics. Dijon: Les Presses Du Reel.

Bozzi, N 2019 Tagging Aesthetics #4: (Machine) Learning Stereotypes. Interview with Max Dovey. Digicult. http://digicult.it/internet/tagging-aesthetics-4-machine-learning-stereotypes-interview-with-max-dovey/ [Last accessed 31 May 2020].

Bozzi, N 2020a #digitalnomads, #solotravellers, #remoteworkers. A Cultural Critique of the Travelling Entrepreneur on Instagram. Social Media + Society: Special Issue: Studying Instagram Beyond Selfies. DOI: http://doi.org/10.1177/2056305120926644

Bozzi, N 2020b Tagging Aesthetics: From Networks to Cultural Avatars. APRJA – A Peer-Reviewed Journal About. DOI: http://doi.org/10.7146/aprja.v9i1.121490

Bozzi, N 2021 Dramatization of the @Gangsta: Instagram Cred in the Age of Glocalized Gang Culture. In: Julie B W (Ed.) Theorizing Criminality and Policing in the Digital Media Age. Bingley, UK: Emerald Publishing. DOI: http://doi.org/10.1108/S2050-206020210000020010

Bratton, B H 2015 The Stack. On Software and Sovereignty. Cambridge, MA: MIT Press. DOI: http://doi.org/10.7551/mitpress/9780262029575.001.0001

Bridges, L E 2021 Digital failure: Unbecoming the “good” data subject through entropic, fugitive, and queer data. Big Data & Society, 8(1). DOI: http://doi.org/10.1177/2053951720977882

Buolamwini, J and Gebru, T 2018 Gender Shades: Intersectional Accuracy Disparities in Commercial Gender Classification. Proceedings of Machine Learning Research, 81: 1–15.

Burrell, J 2016 How the machine ‘thinks’: Understanding opacity in machine learning algorithms. Big Data & Society, 3(1). DOI: http://doi.org/10.1177/2053951715622512

Carah, N and Angus, D 2018 Algorithmic brand culture: participatory labour, machine learning and branding on social media. Media, Culture & Society, 40(2): 178–194. DOI: http://doi.org/10.1177/0163443718754648

Catanzariti, B and Bennett, S 2022 Hidden Humans in the Loop: Investigating representations of data work in machine learning practice. ACM CHI Conference on Human Factors in Computing Systems.

Celis Bueno, C and Schultz Abarca, M J 2021 Memo Akten’s Learning to See: From machine vision to the machinic unconscious. AI & Society, 36: 1177–1187. DOI: http://doi.org/10.1007/s00146-020-01071-2

Chun, W H K 2016 Updating to Remain the Same: Habitual New Media. Cambridge, MA: MIT Press. DOI: http://doi.org/10.7551/mitpress/10483.001.0001

Chun, W H K 2018 Queering Homophily. In: Apprich, C et al. (Eds.) Pattern Discrimination. Minneapolis: University of Minnesota Press, pp. 59–98.

Chun, W H K 2021 Discriminating Data: Correlation, Neighborhoods, and the New Politics of Recognition. Cambridge, MA: MIT Press. DOI: http://doi.org/10.7551/mitpress/14050.001.0001

Cole, S 2022 Artist Uses AI Surveillance Cameras to Identify Influencers Posing for Instagram. Vice, 12 September. https://www.vice.com/en/article/g5vj79/artist-uses-ai-surveillance-cameras-to-identify-influencers-posing-for-instagram [Last accessed 4 April 2023].

Dame, A 2016 Making a name for yourself: tagging as transgender ontological practice on Tumblr. Critical Studies in Media Communication, 33(1): 23–37. DOI: http://doi.org/10.1080/15295036.2015.1130846

Dean, J 2017 Faces as Commons: The Secondary Visuality of Communicative Capitalism. Open! https://www.onlineopen.org/download.php?id=538 [Last accessed 18 June 2023].

De Kosnik, A and Feldman, K 2019 #identity: Hashtagging Race, Gender, Sexuality, and Nation. Ann Arbor, MI: University of Michigan Press. DOI: http://doi.org/10.3998/mpub.9697041

Design Lab 2020 AI at the Edgelands: Data Analytics in States of In/Security | Lucy Suchman | Design@Large [keynote speech panel video]. YouTube. https://www.youtube.com/watch?v=PugFcwrhr6k [Last accessed 19 October 2023].

Dougherty, C 2015 Google photos mistakenly labels black people ‘gorillas.’ The New York Times, 1 July. http://bits.blogs.nytimes.com/2015/07/01/google-photos-mistakenly-labels-black-people-gorillas/ [Last Accessed 18 June 2023].

Farocki, H 2004 Phantom Images. Public, 29: 12–22.

Finn, E 2017 What Algorithms Want. Imagination in the Age of Computing. Cambridge, MA: MIT Press. DOI: http://doi.org/10.7551/mitpress/9780262035927.001.0001

Florini, S 2019 Beyond Hashtags. Racial Politics and Black Digital Networks. New York University Press. DOI: http://doi.org/10.18574/nyu/9781479892464.001.0001

Geboers, M A and Van De Wiele, C T 2020 Machine Vision and Social Media Images: Why Hashtags Matter. Social Media + Society, 6(2). DOI: http://doi.org/10.1177/2056305120928485

Gogarty, L A 2022 Inert Universalism and the Info-Optimism of Legibly Political Art. Selva. https://selvajournal.org/article/inert-universalism/ [Last Accessed 18 June 2023].

Goriunova, O 2019 Face abstraction! Biometric identities and authentic subjectivities in the truth practices of data. Subjectivity, 12: 12–26. DOI: http://doi.org/10.1057/s41286-018-00066-1

Goriunova, O 2020 Humans Categorise Humans: on “ImageNet Roulette” and Machine Vision. Donaufestival Reader. Donaufestival, Austria.

Hayles, N K 1999 How We Became Posthuman: Virtual Bodies in Cybernetics, Literature, and Informatics. Chicago: University of Chicago Press. DOI: http://doi.org/10.7208/chicago/9780226321394.001.0001

Klingemann, M 2018 Mario Klingemann – Artist Profile (Photos, Videos, Exhibitions). Aiartists.org. https://aiartists.org/mario-klingemann [Last accessed 3 October 2023].

Kronman, L 2020 Intuition Machines: Cognizers in Complex Human-Technical Assemblages. APRJA – A Peer-Reviewed Journal About. DOI: http://doi.org/10.7146/aprja.v9i1.121489

Lavrence, C and Cambre, C 2020 ‘Do I Look Like My Selfie?’: Filters and the Digital-Forensic Gaze. Social Media + Society, 6(4). DOI: http://doi.org/10.1177/2056305120955182

Leigh-Star, S and Griesemer, J R 1989 Institutional Ecology, ‘Translations’ and Boundary Objects: Amateurs and Professionals in Berkeley’s Museum of Vertebrate Zoology, 1907–39. Social Studies of Science, 19(3): 387–420. DOI: http://doi.org/10.1177/030631289019003001

Losh, E 2019 Hashtag. New York, NY: Bloomsbury. DOI: http://doi.org/10.5040/9781501344305

Manovich, L 2018 AI Aesthetics. Moscow: Strelka Press.

Massanari, A 2017 #Gamergate and The Fappening: How Reddit’s algorithm, governance, and culture support toxic technocultures. New Media & Society, 19(3): 329–346. DOI: http://doi.org/10.1177/1461444815608807

Muñoz, J E 1999 Disidentifications: Queers of Color and the Performance of Politics. Minneapolis, MN: University of Minnesota Press.

Nayyar, R 2022 Artist slammed for matching Instagram photos to open-camera footage. Hyperallergic, 20 September. https://hyperallergic.com/762604/artist-slammed-for-matching-instagram-photos-to-open-camera-footage/ [Last Accessed 4 April 2023].

Noble, S U 2018 Algorithms of Oppression. New York: New York University Press.

O’Neil, C 2016 Weapons of Math Destruction: How Big Data Increases Inequality and Threatens Democracy. Crown Publishing.

Paglen, T and Crawford, K 2019 Excavating AI: The Politics of Images in Machine Learning Training Sets. https://excavating.ai [Last Accessed 10 April 2023].

Parry, K 2022 A Theory of Assembly: From Museums to Memes. Minneapolis: University of Minnesota Press.

Pold, S B and Andersen, C U 2018 The Metainterface: The Art of Platforms, Cities, and Clouds. Cambridge, MA: MIT Press. DOI: http://doi.org/10.7551/mitpress/11041.001.0001

Rettberg, J W, Gunderson, M, Kronman, L, Solberg, R and Stokkedal, L H 2019 Mapping Cultural Representations of Machine Vision: Developing Methods to Analyse Games, Art and Narratives. ACM Hypertext Proceedings: 97–101. DOI: http://doi.org/10.1145/3342220.3343647

Ruiz, C 2019 Leading online database to remove 600,000 images after art project reveals its racist bias. The Art Newspaper, 23 September. https://www.theartnewspaper.com/2019/09/23/leading-online-database-to-remove-600000-images-after-art-project-reveals-its-racist-bias [Last Accessed 18 June 2023].

Sartori, L and Bocca, G 2022 Minding the gap(s): public perceptions of AI and socio-technical imaginaries. AI & Society, 38: 443–458. DOI: http://doi.org/10.1007/s00146-022-01422-1

Scheuerman, M K, Wade, K, Lustig, C and Brubaker, J R 2020 How We’ve Taught Algorithms to See Identity: Constructing Race and Gender in Image Databases for Facial Analysis. Proceedings of the ACM on Human-Computer Interaction, 4(CSCW1): 1–35. DOI: http://doi.org/10.1145/3392866

Spartin, L and Desnoyers-Stewart, J 2022 Digital Relationality: Relational aesthetics in contemporary interactive art. Proceedings of EVA London 2022. DOI: http://doi.org/10.14236/ewic/EVA2022.29

Stark, L and Crawford, K 2019 The Work of Art in the Age of Artificial Intelligence: What Artists Can Teach Us About the Ethics of Data Practice. Surveillance & Society, 17(3/4): 442–455. DOI: http://doi.org/10.24908/ss.v17i3/4.10821

Steyerl, H 2016 A sea of data: Apophenia and pattern mis-recognition. e-flux Journal, 72. https://www.e-flux.com/journal/72/60480/a-sea-of-data-apophenia-and-pattern-mis-recognition/ [Last Accessed 18 June 2023].

Stokel-Walker, C 2022 A surveillance artist shows how Instagram magic is made. Input, 13 September. https://www.inverse.com/input/culture/dries-depoorters-ai-surveillance-art-the-follower-instagram-influencers-photos [Last Accessed 4 April 2023].

Uliasz, R 2021 Seeing like an algorithm: operative images and emergent subjects. AI & Society, 36: 1233–1241. DOI: http://doi.org/10.1007/s00146-020-01067-y

Vander Wal, T 2007 Folksonomy Coinage and Definition. http://vanderwal.net/folksonomy.html [Last Accessed 10 April 2023].

Virilio, P 1994 The Vision Machine. Indianapolis: Indiana University Press.

Wille, M 2021 This AI bot publicly shames politicians over their smartphone use. Input, 6 July. https://www.inverse.com/input/tech/this-ai-bot-proves-politicians-are-addicted-to-their-phones-too [Last Accessed 4 April 2023].

Zylinska, J 2020 AI Art: Machine Visions and Warped Dreams. Open Humanities Press.